In a recent article on this site, we’ve argued that evolution doesn’t undermine belief in God, that the truth of Christianity doesn’t stand or fall with the theory of evolution, and that Christians really shouldn’t get so worked up about it. In another article, we’ve presented arguments against Intelligent Design even though we think there is a case for design from biology (it’s just that it doesn’t require rejecting evolution).

Still, evolution is an interesting topic and recently I wrote a paper on simulation results for a simple model of cumulative selection in evolution. While a bit different from the usual sorts of articles on this site, some readers might be interested in it so I thought I’d post a link to it. It’s based on the so-called Weasel Program discussed by Richard Dawkins in chapter 3 of his book The Blind Watchmaker. I read this many years ago and a few years back I started playing around with this myself. Dawkins doesn’t provide details of exactly how he went about the simulations so my goal wasn’t to try to repeat what he did or even to try to figure out what he did. I just wanted to do something similar and then see how it behaved when some of the parameters in the model were changed.

In Dawkins’ discussion he takes a phrase from Shakespeare:

Methinks it is like a weasel

and rightly points out that if you have a monkey randomly typing a sequence of characters of the same length as the desired phrase it is going to take a very, very long time to get the answer! Even with the power of modern computers it isn’t feasible as the timescales are far too vast. In fact, it’s an interesting exercise to write a program to see how long it takes to find a four-letter word just by random guesses, then a five-letter word, and so on – it runs out of steam very quickly indeed. Cumulative selection is the key. Take a random sequence and make a number of copies of it with some mutations (changes in characters). Then select the copy that has the most matches with the target phrase and use it to start a new generation, and keep repeating the process. Doing this, Dawkins achieved the answer in about 50 generations.
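For readers who want to try this themselves, here is a minimal Python sketch of both approaches: pure random guessing and cumulative selection. It is not the code behind my paper, and certainly not Dawkins’ program. The 27-character alphabet (capital letters plus space), the function names, and the choice to discard the parent each generation and redraw a mutated character uniformly at random are all assumptions made for illustration.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "   # assumed 27-symbol alphabet
TARGET = "METHINKS IT IS LIKE A WEASEL"


def random_search(target, max_tries, rng):
    """Guess whole strings at random; return the number of tries, or None."""
    for tries in range(1, max_tries + 1):
        guess = "".join(rng.choice(ALPHABET) for _ in target)
        if guess == target:
            return tries
    return None


def score(candidate, target):
    """Number of positions where candidate matches target."""
    return sum(a == b for a, b in zip(candidate, target))


def mutate(parent, rate, rng):
    """Copy parent, redrawing each character at random with probability rate."""
    return "".join(
        rng.choice(ALPHABET) if rng.random() < rate else ch for ch in parent
    )


def cumulative_selection(target=TARGET, copies=10, rate=0.028,
                         max_generations=100_000, seed=None):
    """Return generations needed to reach target, or None if the cap is hit."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in target)
    generation = 0
    while parent != target:
        if generation >= max_generations:
            return None
        # Assumption: the parent is discarded, so the best score can go down.
        offspring = [mutate(parent, rate, rng) for _ in range(copies)]
        parent = max(offspring, key=lambda s: score(s, target))
        generation += 1
    return generation


if __name__ == "__main__":
    rng = random.Random(0)
    print("random search, 2 letters:", random_search("IT", 100_000, rng))
    print("cumulative selection:", cumulative_selection(seed=0))
```

Trying `random_search` with three, four and then five letters gives a feel for how quickly random guessing runs out of steam, while changing `copies` and `rate` in `cumulative_selection` is the kind of experiment described in the rest of this article. Different design choices (for example, keeping the parent among the candidates) may give quite different generation counts.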

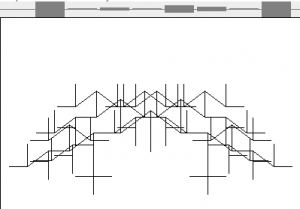

In my implementation, if the characters that match the target mutate at the same rate as those that don’t, the best result I can get is about 730 generations on average. Hence, Dawkins must have done something different. For example, perhaps he had more copies in each generation (I used 10) or perhaps he had a lower mutation rate for characters that match the target than those that don’t. More interesting, however, is how this result of 730 changes as the mutation rate changes. The number of generations required increases whether the mutation rate increases or decreases. For example, if the mutation rate changes from 0.028 (where the result is 730) to 0.04, over half a million generations are required to reach the target.
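One rough way to get a feel for why a higher mutation rate can hurt is to ask how likely it is that a copy of the 28-character phrase comes through a generation with no character changed at all. The sketch below is only a back-of-the-envelope calculation, not the analysis in the paper: it assumes every character mutates independently at the same rate, treats every mutation as a change, and ignores the selection step entirely. The function names are made up for illustration.

```python
LENGTH = 28   # characters in the target phrase
COPIES = 10   # copies per generation, as in my implementation


def p_copy_intact(rate, length=LENGTH):
    """Chance that no position in a single copy mutates."""
    return (1.0 - rate) ** length


def p_any_intact(rate, copies=COPIES, length=LENGTH):
    """Chance that at least one of the copies comes through unchanged."""
    return 1.0 - (1.0 - p_copy_intact(rate, length)) ** copies


if __name__ == "__main__":
    for rate in (0.01, 0.028, 0.04):
        print(
            f"rate={rate:.3f}  "
            f"one copy intact={p_copy_intact(rate):.3f}  "
            f"no intact copy among {COPIES}={1.0 - p_any_intact(rate):.4f}"
        )
```

Under these assumptions, the chance of a generation containing no unchanged copy grows as the rate rises from 0.028 to 0.04, so progress near the target is lost more often. At the other extreme, a very low rate keeps what has been gained but rarely produces the new matches that are needed, which fits with the number of generations rising in both directions.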

A slightly more sophisticated genetic algorithm shows similar behaviour. In fact, in some cases it is much more sensitive: the mutation rate needs to lie within a very narrow band, otherwise the number of generations required increases dramatically and in some cases the target cannot be reached at all. Sensitivity of this kind seems to be well understood in the world of evolutionary computing and so my results are not new in that sense, but it was still interesting (at least to me) to explore this in a bit more detail in the context of Dawkins’ scenario.

What’s the significance of all this? It certainly doesn’t show evolution is false! But it does raise questions about some of Dawkins’ claims. Other much more extensive work on evolutionary computing does question evolutionary gradualism (but not evolution as such) of the sort advocated by Dawkins. See ref. [5] in the paper for example. (For further details see here where the first chapter is available and makes for very interesting reading about evolutionary gradualism.)

Also, even though this is only a very simple model, it shows a lot of sensitivity to parameters. While it is far from clear that it would be warranted to extrapolate this to evolution in general, it is suggestive, and raises the question of whether life might depend on biological parameters lying within very narrow ranges in a similar way to that in which various parameters in physics are ‘fine-tuned’ for life. It is interesting to note that Simon Conway Morris, a leading figure in evolutionary biology, has suggested that something akin to fine-tuning occurs in biology. In his case, he points to evolutionary convergence and argues that evolution might be a much more structured and constrained process than had previously been thought.[1]

[1] See his Life’s Solution: Inevitable Humans in a Lonely Universe (Cambridge: Cambridge University Press, 2003).